Distributed Containerised Infrastructure

16/10/2018, by Justin Cook

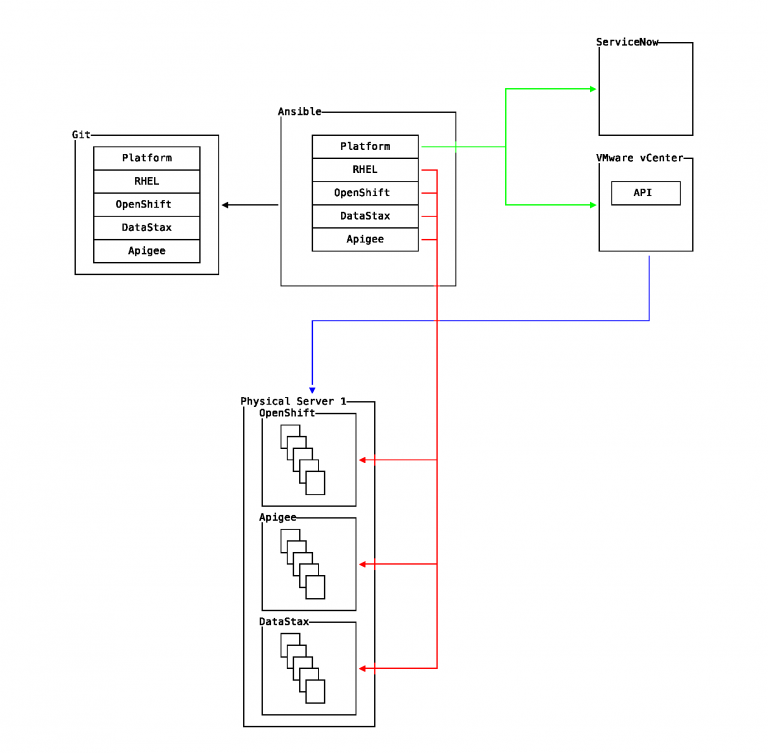

Many designs use Red Hat Enterprise Linux as the environment for delivery. The more interesting designs also use software platform components such as OpenShift, Apigee, and DataStax Cassandra. Combined, they provide comprehensive programmatic access to releasable artefacts such as Docker containers, API proxies, storage, et al.

Software Delivery

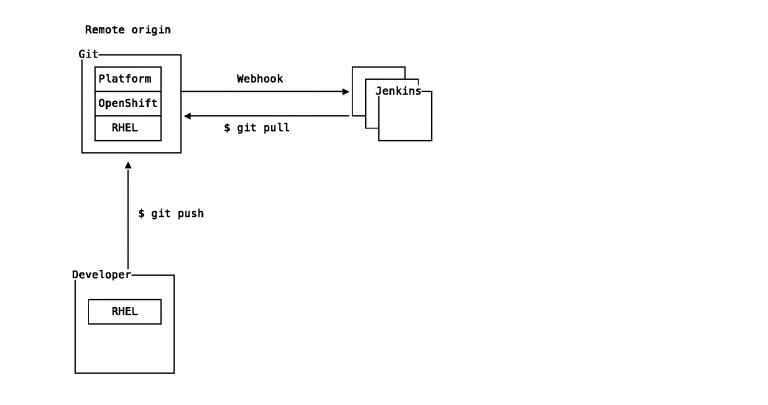

Software is delivered through pipelines. Simply put, a pipeline defines how upstream changes are integrated, built, and tested, and how releasable artefacts are produced. Continuous integration and continuous delivery, or CI/CD, has become the well-known, pithy name for this process.
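The pipeline idea above can be sketched as an ordered list of stages that share a context and halt at the first failure. This is a minimal illustration under stated assumptions, not any particular CI/CD product: the stage functions, the `Context` dictionary, and the revision id are all hypothetical stand-ins.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Context = Dict[str, object]  # hypothetical shared state passed between stages


@dataclass
class Stage:
    name: str
    run: Callable[[Context], bool]  # returns True if the stage succeeded


@dataclass
class Pipeline:
    stages: List[Stage]
    log: List[str] = field(default_factory=list)

    def execute(self, context: Context) -> bool:
        """Run stages in order, stopping at the first failure."""
        for stage in self.stages:
            ok = stage.run(context)
            self.log.append(f"{stage.name}: {'passed' if ok else 'failed'}")
            if not ok:
                return False
        return True


def integrate(ctx: Context) -> bool:
    ctx["revision"] = "abc123"  # hypothetical commit id from upstream
    return True


def build(ctx: Context) -> bool:
    ctx["artefact"] = f"app-{ctx['revision']}.tar.gz"
    return True


def run_tests(ctx: Context) -> bool:
    return str(ctx["artefact"]).endswith(".tar.gz")  # stand-in for a real test suite


def release(ctx: Context) -> bool:
    ctx["released"] = ctx["artefact"]  # the releasable artefact
    return True


pipeline = Pipeline([
    Stage("integrate", integrate),
    Stage("build", build),
    Stage("test", run_tests),
    Stage("release", release),
])
context: Context = {}
succeeded = pipeline.execute(context)
print(succeeded, pipeline.log)
```

Stopping at the first failed stage is the property that matters: nothing reaches the release stage unless every earlier stage passed.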

Orchestration and Automation

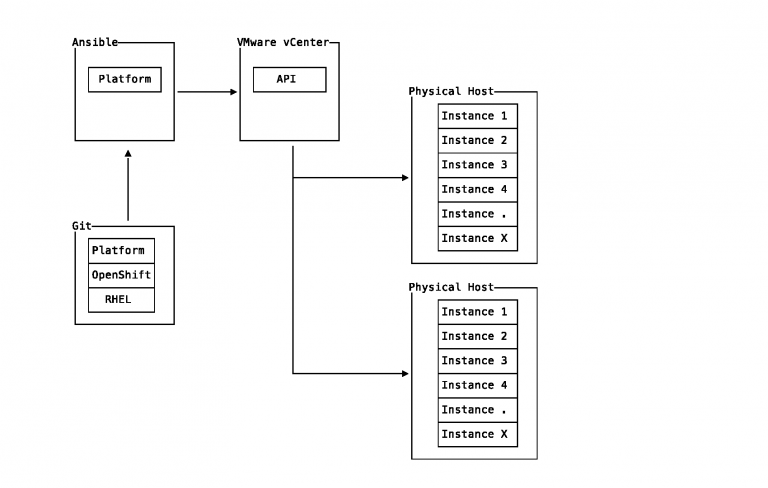

Infrastructure has become software defined. Not only have appliances, storage, and networks converged in software, but management is also delivered declaratively through infrastructure as code, or IaC. This code is broken down into idempotent tasks that define a desired state, not how to achieve that state. Each task may operate on an individual platform or invoke an API in a broker pattern.
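An idempotent, declarative task can be modelled in a few lines. The sketch below is an in-memory stand-in, not Ansible code. It borrows the `present`/`absent` vocabulary that Ansible's package modules use and reports whether anything changed. The package names and the set representing a host are hypothetical.

```python
from typing import Dict, Set


def ensure_packages(installed: Set[str], desired: Dict[str, str]) -> bool:
    """Converge `installed` towards `desired`; return True if anything changed.

    `desired` maps a package name to "present" or "absent". The caller states
    what should be true, and the function only acts where reality differs, so
    running it repeatedly is safe (idempotent).
    """
    changed = False
    for name, state in desired.items():
        if state == "present" and name not in installed:
            installed.add(name)
            changed = True
        elif state == "absent" and name in installed:
            installed.discard(name)
            changed = True
        elif state not in ("present", "absent"):
            raise ValueError(f"unknown state {state!r} for {name}")
    return changed


host = {"openssh", "telnet"}  # hypothetical current state of one server
desired = {"nginx": "present", "telnet": "absent"}
first_run = ensure_packages(host, desired)
second_run = ensure_packages(host, desired)
print(first_run, second_run, sorted(host))
```

The second run reports no change because the host already matches the declared state. That is what makes it safe to apply the same code on every run.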

Immutable infrastructure emerged to minimise integration issues caused largely by technical debt, or by changes not captured in code. Rather than being updated in place, components are replaced on every deployment. Describing changes in software using these patterns has led to substantial efficiencies in the delivery of infrastructure.
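Immutable deployment, as described above, can be sketched as "launch replacements, then retire the originals". In this minimal model, frozen dataclasses stand in for servers and nothing is ever patched in place. The image names and the id counter are hypothetical.

```python
from dataclasses import dataclass
from itertools import count
from typing import List, Tuple

_next_id = count(1)  # simple source of unique instance ids


@dataclass(frozen=True)  # frozen: an instance cannot be modified after creation
class Instance:
    id: int
    image: str


def launch(image: str) -> Instance:
    """Create a brand-new instance from an image."""
    return Instance(id=next(_next_id), image=image)


def deploy(fleet: List[Instance], new_image: str) -> Tuple[List[Instance], List[Instance]]:
    """Replace every instance with a fresh one built from new_image.

    Returns (new_fleet, retired). Nothing is patched in place: the old
    instances are simply retired once their replacements exist.
    """
    new_fleet = [launch(new_image) for _ in fleet]
    return new_fleet, list(fleet)


fleet = [launch("app:1.0") for _ in range(3)]
fleet_v2, retired = deploy(fleet, "app:1.1")
print([i.id for i in fleet_v2], [i.id for i in retired])
```

Because every deployment starts from a known image, a component cannot silently drift away from what the code describes.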

Realising complex systems in code requires a source of truth combined with rigid workflows. Furthermore, patterns of devolution, or delegation to lower levels, need to be ingrained in the culture.

Version Control

Software has consumed infrastructure, and version control systems are now the source of truth. Software projects across an organisation typically have several contributors, and these projects reuse components. For decades, version control has been a requirement for projects that need to maintain source code. Given that changes pass through multiple environments on the journey to production, the source of truth needs to be promotable: enter distributed version control.

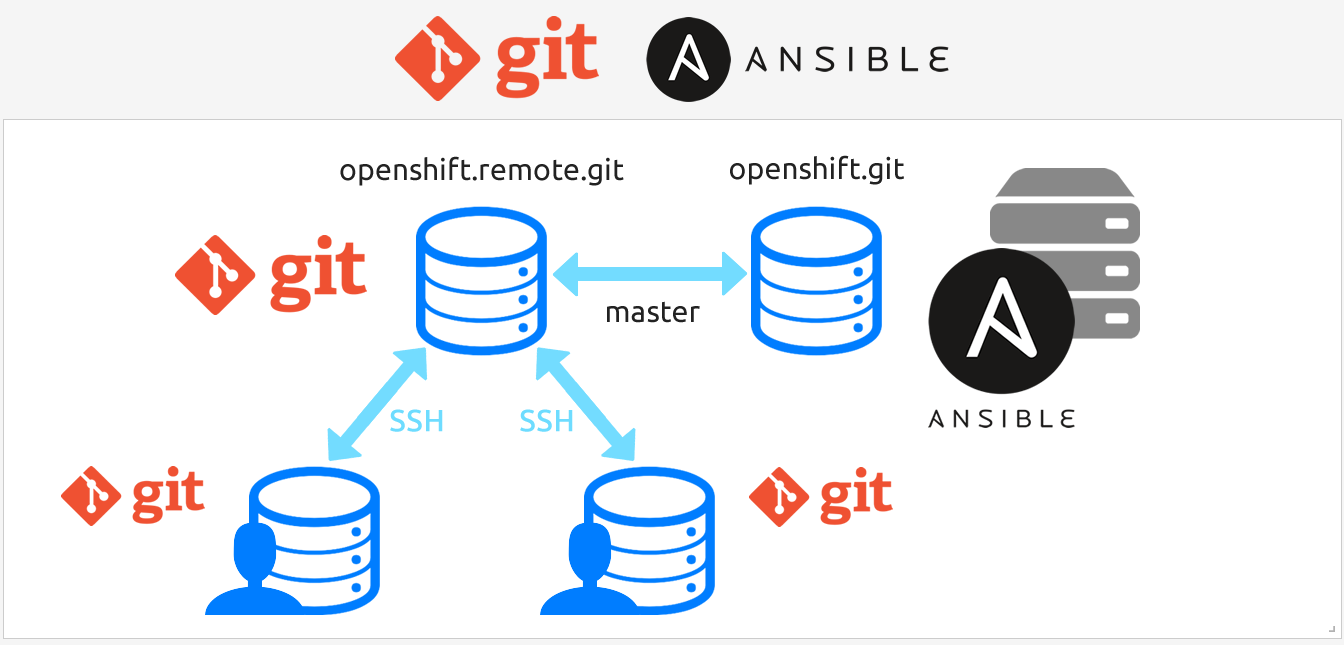

Git is a distributed version control system originally designed to handle contributions from thousands of developers to the Linux kernel code base. Its flexibility is hard to match. Not only is it distributed, but together with hosting platforms such as GitHub and GitLab it supports a “fork and pull request” workflow. This workflow helps protect the integrity of code bases by providing a straightforward mechanism for automated code integration and for review of commits by someone other than the author. Further, anyone with access to the code base can submit a change request, complete with code, for inclusion by maintainers.
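The safeguard described above, automated checks plus review by someone other than the author, can be expressed as a simple merge rule. This is a hypothetical policy model, similar in spirit to the branch protection settings hosting platforms offer. It is not any platform's actual API, and the user names are illustrative.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class PullRequest:
    author: str
    ci_passed: bool  # result of automated integration checks
    approvals: Set[str] = field(default_factory=set)


def can_merge(pr: PullRequest, maintainers: Set[str]) -> bool:
    """Allow a merge only if automated checks passed and at least one
    maintainer other than the author approved the change."""
    independent_approvals = (pr.approvals & maintainers) - {pr.author}
    return pr.ci_passed and bool(independent_approvals)


maintainers = {"alice", "bob"}  # hypothetical maintainers of the code base
pr = PullRequest(author="alice", ci_passed=True, approvals={"alice"})
print(can_merge(pr, maintainers))  # self-approval alone is not enough
pr.approvals.add("bob")
print(can_merge(pr, maintainers))
```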

The Engine

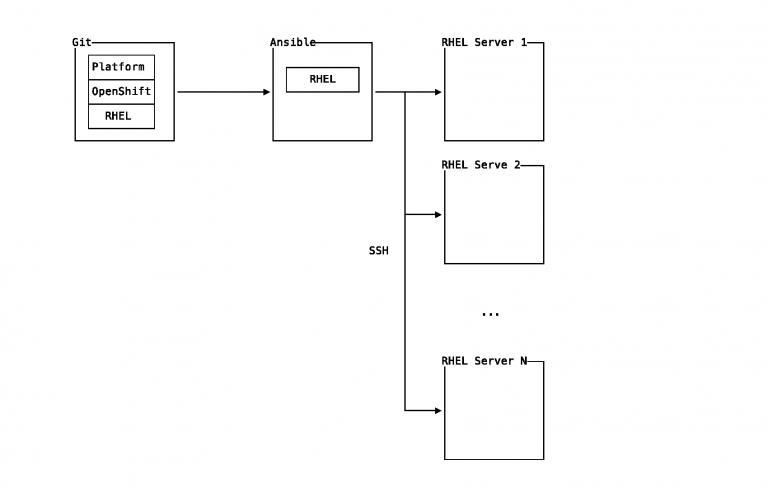

Declarative code is executed by Ansible. Ansible runs “plays” in series from a “playbook”; each play applies tasks and “roles” to a set of hosts, all expressed as files in YAML format. YAML is human-readable and whitespace-sensitive, with minimal syntax. Ansible roles are self-contained bundles of content which contain, amongst other things, tasks, dependencies, variables, and libraries.
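To make the playbook, play, and role hierarchy concrete, the sketch below represents a playbook as plain Python data and lists the order in which work would be done. It assumes Ansible's default "linear" strategy, in which each task completes across all hosts in a play before the next task starts. Real Ansible runs a task on several hosts in parallel, up to its `forks` setting, so host order within a task is only illustrative. The role, task, and host names are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical role definitions: role name -> ordered task names.
ROLES: Dict[str, List[str]] = {
    "common": ["install chrony", "configure sshd"],
    "web": ["install nginx", "start nginx"],
}

# A playbook is an ordered list of plays; each play targets hosts and applies roles.
PLAYBOOK = [
    {"name": "baseline", "hosts": ["web1", "web2"], "roles": ["common"]},
    {"name": "web tier", "hosts": ["web1", "web2"], "roles": ["web"]},
]


def execution_order(playbook, roles) -> List[Tuple[str, str, str]]:
    """Return (play, task, host) tuples in linear-strategy order: plays in
    series, tasks in series, each task across all hosts before the next."""
    order = []
    for play in playbook:
        tasks = [task for role in play["roles"] for task in roles[role]]
        for task in tasks:
            for host in play["hosts"]:
                order.append((play["name"], task, host))
    return order


for step in execution_order(PLAYBOOK, ROLES):
    print(step)
```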

Each YAML file is kept under source control in Git. Each release is tagged and promoted to the next environment in the workflow by pushing to that environment's Git remote.
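Tag-and-push promotion can be sketched with two standard Git commands, `git tag -a` and `git push <remote> <tag>`. The sketch assumes a hypothetical `dev` → `test` → `prod` path in which each environment name is also the name of a Git remote. By default it only returns the commands (a dry run), so it is safe to call outside a repository.

```python
import shlex
import subprocess
from typing import List, Optional

# Hypothetical promotion path; each name is also the name of a Git remote.
ENVIRONMENTS = ["dev", "test", "prod"]


def next_environment(current: str) -> Optional[str]:
    """Return the environment after `current`, or None if it is the last one."""
    index = ENVIRONMENTS.index(current)
    return ENVIRONMENTS[index + 1] if index + 1 < len(ENVIRONMENTS) else None


def promote(tag: str, current_env: str, dry_run: bool = True) -> List[List[str]]:
    """Tag the checked-out commit and push the tag to the next environment's remote.

    With dry_run=True (the default) the git commands are only returned, not run.
    """
    target = next_environment(current_env)
    if target is None:
        raise ValueError(f"{current_env} is the final environment")
    commands = [
        ["git", "tag", "-a", tag, "-m", f"Release {tag}"],
        ["git", "push", target, tag],
    ]
    if not dry_run:
        for command in commands:
            subprocess.run(command, check=True)  # raises CalledProcessError on failure
    return commands


for command in promote("v1.4.0", "dev"):
    print(shlex.join(command))
```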

Provisioning

Creating server instances is no longer a matter of raising a ServiceNow ticket and waiting for manual action by service desk personnel. Cloud providers and software such as VMware vCenter expose APIs, which Ansible supports through its modules.

Configuration Management

After an instance is created, further configuration is necessary. Ansible provides a seamless transition from provisioning to configuration management. For example, it may be necessary to enforce versions of libraries or configurations of kernel security modules. Multiple workflows are supported; one reasonable approach is to trigger an audit after a series of roles has run, to ensure compliance.
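A post-run compliance audit boils down to comparing required settings with what a host reports. The minimal sketch below assumes the host's facts have already been collected into a dictionary. The package version and SELinux mode are illustrative values only, not recommendations.

```python
from typing import Dict, List


def audit(required: Dict[str, str], actual: Dict[str, str]) -> List[str]:
    """Compare required versions/settings with what a host reports.

    Returns human-readable findings in name order; an empty list means compliant.
    """
    findings = []
    for name, expected in sorted(required.items()):
        found = actual.get(name)
        if found is None:
            findings.append(f"{name}: missing (required {expected})")
        elif found != expected:
            findings.append(f"{name}: {found} installed, {expected} required")
    return findings


# Hypothetical policy: a pinned library version and an enforced security module mode.
required = {"openssl": "1.1.1k", "selinux": "enforcing"}
print(audit(required, {"openssl": "1.1.1k", "selinux": "enforcing"}))
print(audit(required, {"openssl": "1.0.2"}))
```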

Software Deployment

The end goal of a platform is to host software for consumption. Each layer of abstraction is responsible for the layers above it. For instance, an OpenShift cluster is responsible for the availability of all applications deployed on that cluster instance. Therefore, if a cluster instance is to be upgraded, the teams whose applications are hosted on it will need to carry out development and testing.

The same is true of the underlying platform, Red Hat Enterprise Linux. For example, if a new kernel is to be introduced, processes that ensure the availability of all applications resident on the affected systems will need to be executed.
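Because each layer is responsible for the layers above it, an upgrade plan starts by working out who is affected. Here is a minimal impact-analysis sketch over a hypothetical topology, which maps clusters to the RHEL hosts beneath them and the applications above them. All names are illustrative.

```python
from typing import Dict, Set

# Hypothetical topology: RHEL hosts backing each cluster, and apps on each cluster.
CLUSTER_HOSTS: Dict[str, Set[str]] = {"ocp-a": {"rhel1", "rhel2"}, "ocp-b": {"rhel3"}}
CLUSTER_APPS: Dict[str, Set[str]] = {"ocp-a": {"payments", "search"}, "ocp-b": {"reports"}}


def apps_affected_by_cluster(cluster: str) -> Set[str]:
    """Upgrading a cluster affects every application deployed on it."""
    return set(CLUSTER_APPS.get(cluster, set()))


def apps_affected_by_hosts(hosts: Set[str]) -> Set[str]:
    """A kernel change on some hosts affects every app on any cluster they back."""
    affected: Set[str] = set()
    for cluster, members in CLUSTER_HOSTS.items():
        if members & hosts:
            affected |= CLUSTER_APPS.get(cluster, set())
    return affected


print(sorted(apps_affected_by_cluster("ocp-b")))
print(sorted(apps_affected_by_hosts({"rhel2"})))
```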

Self-Service

Code developed by engineering and operations to support progress and availability can easily be made available for others to consume on demand. Ansible Galaxy makes roles searchable and allows others to declare them as requirements. For example, if a role requires Nginx and the company provides an Nginx role, that role may be included as a dependency.
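When one role depends on another, Ansible runs a role's declared dependencies before the role itself. The sketch below models that ordering with Python's standard `graphlib` module (Python 3.9+). A shared dependency appears only once, and a dependency cycle raises `graphlib.CycleError`. The `company_*` role names are hypothetical, and this models ordering only; it does not read real role metadata.

```python
from graphlib import TopologicalSorter
from typing import Dict, List


def role_run_order(deps: Dict[str, List[str]], role: str) -> List[str]:
    """Order in which `role` and its transitive dependencies would run,
    dependencies first, each role listed once."""
    graph: Dict[str, List[str]] = {}
    stack = [role]
    while stack:  # collect only the roles reachable from `role`
        current = stack.pop()
        if current in graph:
            continue
        graph[current] = deps.get(current, [])
        stack.extend(graph[current])
    # TopologicalSorter treats each value as the predecessors of its key.
    return list(TopologicalSorter(graph).static_order())


# Hypothetical company roles: web needs nginx and firewall, both need common.
deps = {
    "company_web": ["company_nginx", "company_firewall"],
    "company_nginx": ["company_common"],
    "company_firewall": ["company_common"],
}
print(role_run_order(deps, "company_web"))
```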

For users who are not fluent with Ansible, Ansible Tower provides a web interface. Users with access can select roles to run against a set of servers, referred to as an “inventory”, deploy them to that inventory, and receive the expected results.

Finally, Ansible Tower provides a REST API, which is available to users, platforms such as CloudForms, and monitoring tools. If a monitored threshold is breached, the monitoring tool can respond with a REST call to the Ansible Tower endpoint, and Ansible Tower will in turn invoke a playbook.
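A monitoring hook of this kind could build a POST to Tower's job template launch endpoint, `/api/v2/job_templates/<id>/launch/`, using only the standard library. The sketch builds the request without sending it, so it runs offline. Sending it would be `urllib.request.urlopen(request)`. Several details are assumptions: the base URL, the template id `42`, the token, and bearer-token authentication. Also assumed is that the job template is configured to accept `extra_vars` on launch, since Tower otherwise ignores them.

```python
import json
import urllib.request
from typing import Optional


def build_launch_request(base_url: str, template_id: int, token: str,
                         extra_vars: Optional[dict] = None) -> urllib.request.Request:
    """Build (but do not send) a POST that launches a Tower job template."""
    url = f"{base_url.rstrip('/')}/api/v2/job_templates/{template_id}/launch/"
    body = json.dumps({"extra_vars": extra_vars or {}}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {token}"},
        method="POST",
    )


def on_metric(value: float, threshold: float, base_url: str, template_id: int,
              token: str) -> Optional[urllib.request.Request]:
    """Return a launch request only when the monitored value breaches the threshold."""
    if value <= threshold:
        return None
    return build_launch_request(base_url, template_id, token, {"observed_value": value})


# Hypothetical Tower instance, job template id, and token.
request = on_metric(95.0, 90.0, "https://tower.example.com", 42, "TOKEN")
if request is not None:
    print(request.get_method(), request.full_url)
```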
